How to avoid failures in IoT designs

10 January 2019


Developing a new IoT-ready device can pose special challenges, even for experienced design engineers. Whether it’s wearable technology, a home automation gadget, or an intelligent sensor for an industrial process, a number of design pitfalls await the unwary, but they can be avoided with careful foresight and planning. In this article, we look at some of these issues: what can go wrong, and how to avoid it.

Note that we are focusing on edge devices such as sensors, actuators and routers, rather than the infrastructure aspects of the IoT – long-haul communications over the Internet, and cloud computing resources – as the issues related to these are somewhat different.

An IoT edge device’s success from installation through to the end of its operational life calls not only for good design and manufacturing, but also adequate deployment and provisioning. Provisioning means timely battery replacement if applicable, and an ability to accept and incorporate software/firmware updates as required.

Accordingly, we start at the beginning – the initial project planning – and then move on to look at the issues that can arise during design, production and deployment of IoT edge devices.

Planning the project

According to a Cisco study completed in May 2017, 60 percent of IoT initiatives stall at the Proof of Concept (PoC) stage and only 26 percent of companies have had an IoT initiative that they considered a complete success. Additionally, a third of all completed projects were not considered a success.

Commenting on this, a TechTarget ‘IoT Agenda’ blog post suggests that such failures may be due to new and untested business models, against a background of a lack of proven strategies. Instead of charging into IoT projects with guns blazing, businesses can prevent failure by taking a gradual ramp-up approach, in which testing their PoCs plays a vital role. As tempting as it might be to scale as quickly as possible to beat potential competition, teams can reap far greater benefits by testing use cases in live environments on a small scale, applying the lessons learned and making changes before proceeding to full-scale development and deployment.

The project team will also be challenged if it has too many learning curves to master in one project; there can be project delays and missed deadlines as engineers’ focus is taken away from their core competencies. While putting sufficient budget and staffing levels into place is essential, it’s equally important to ensure that the project team has the right skill sets.

In fact, some of the toughest skill sets to hire for are in the highest demand for IoT projects. When asked about the technological skills necessary for IoT success, and the difficulty faced in hiring for those skills, IoT professionals ranked data analytics and big data first (named as necessary by 75 percent and as difficult to hire for by 35 percent), followed by embedded software development (71 percent and 33 percent) and IT security (68 percent and 31 percent).

One response to these issues can be to simplify the development process as much as possible by taking advantage of development environments such as the Raspberry Pi ecosystem. Farnell’s Raspberry Pi resource, for example, includes starter packs as shown in Fig.1, boards with wireless compliance, expansion boards, enclosures, cables, connectors and other accessories – including audio and visual products – that look after basic integration issues and allow designers to focus on their core expertise as quickly as possible.

Complying with standards

An IoT edge installation can contain a mixture of device types, along with a router or gateway platform to handle communications between all the devices and the wider IoT infrastructure. Therefore, a Network World article recommends that project designers should choose a capable IoT platform that supports an extensive mix of protocols for data ingestion. The list of protocols for industrial-minded edge platforms generally includes brownfield deployment staples such as OPC UA, BACnet and Modbus as well as more current ones such as ZeroMQ, Zigbee, BLE and Thread. Equally important, the platform must be modular in its support for protocols, allowing existing means of asset communication to be customised and new ones to be developed.
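One way to picture the modular protocol support described above is a small registry in which each protocol contributes its own decoder. This is only a sketch: the `register` and `ingest` names, the payload layouts and the scaling factors are all assumptions made for illustration, not the API of any particular IoT platform or the real framing of Modbus or BLE.

```python
from typing import Callable, Dict

# Hypothetical registry mapping a protocol name to a decoder function.
# Each decoder turns a raw payload into a dict of named readings.
Decoder = Callable[[bytes], Dict[str, float]]
_DECODERS: Dict[str, Decoder] = {}


def register(protocol: str) -> Callable[[Decoder], Decoder]:
    """Decorator that adds a decoder to the registry under `protocol`."""
    def wrap(func: Decoder) -> Decoder:
        _DECODERS[protocol.lower()] = func
        return func
    return wrap


@register("modbus")
def decode_modbus_register(payload: bytes) -> Dict[str, float]:
    # Illustrative only: one big-endian 16-bit register value scaled
    # by 0.1 (an assumed device convention, not part of the protocol).
    return {"value": int.from_bytes(payload[:2], "big") * 0.1}


@register("ble")
def decode_ble_reading(payload: bytes) -> Dict[str, float]:
    # Illustrative only: a little-endian signed 16-bit value in 0.01 units.
    return {"value": int.from_bytes(payload[:2], "little", signed=True) * 0.01}


def ingest(protocol: str, payload: bytes) -> Dict[str, float]:
    """Dispatch a payload to the decoder registered for its protocol."""
    try:
        decoder = _DECODERS[protocol.lower()]
    except KeyError:
        raise ValueError(f"no decoder registered for {protocol!r}") from None
    return decoder(payload)
```

Supporting a further protocol then means adding one decorated function, leaving `ingest` and the existing decoders untouched.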

More detailed information on IoT standards issues is available by reference to the IEEE’s 2413 Project – A standard for an Architectural Framework for the IoT. Commenting on this project, the IEEE says:

“The Internet of Things (IoT) is predicted to become one of the most significant drivers of growth in various technology markets. Most current standardisation activities are confined to very specific verticals and represent islands of disjointed and often redundant development. The architectural framework defined in this standard will promote cross-domain interaction, aid system interoperability and functional compatibility, and further fuel the growth of the IoT market. The adoption of a unified approach to the development of IoT systems will reduce industry fragmentation and create a critical mass of multi-stakeholder activities around the world.”

Which processor chip?

Most IoT products depend on having exactly the right level of processing power. Clearly, insufficient capability will render them unable to handle their target application. However, if the processor is too powerful, it can cause problems related to PCB real estate, cooling, power consumption and cost.

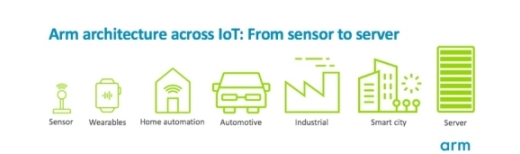

One broad approach to finding the right CPU for your application is to review a wide-ranging processor family, such as Arm’s Cortex series designed to cover IoT applications from sensors to servers, as shown in Fig.2.

The Cortex-A32 processor for embedded IoT applications is scalable, and backwards compatible with earlier devices; these considerations are important in ensuring that your product can be easily evolved to remain competitive or take advantage of new opportunities. The processor is rated for IoT applications such as industrial, healthcare, home automation and wearables. It supports many rich operating systems, real-time operating systems (RTOS) and related firmware, together with a selection of development tools and silicon vendors. It also offers a 25 percent power efficiency improvement over the Cortex-A7 processor.

The Cortex-M family of processors offers a range of capabilities as shown:

• Cortex-M0: Lowest area

• Cortex-M0+: Highest energy efficiency

• Cortex-M3: Energy-performance balance

• Cortex-M4: Blended MCU and digital signal processing

• Cortex-M7: Highest performance

Arm’s newest Cortex-M processors, Cortex-M23 and Cortex-M33, build upon the success of the Cortex family of processors and bring Arm TrustZone security isolation to even the smallest of devices. As outlined later in this article, security is a key consideration in the IoT.

Configurable processors: In some applications, such as wearable devices, achieving the right power/performance trade-off can be so challenging that using a processor that is configurable and extendable as well as low power becomes essential. Such processors can be tuned in terms of their internal architecture (the number of registers, the type of multiplier, and the number of interrupts and levels, etc.) to achieve the exact balance between performance and power required.

The Synopsys DesignWare ARC EM4 Processor, with a configurable and extensible 32-bit RISC microarchitecture, was developed to address the power/performance paradox in IoT and other applications. It can be optimised with configurable hardware extensions for a sensor application, for instance, specifically aimed at reducing power or energy consumption.

Fig.1: Raspberry Pi 3 Model B+ starter pack

A typical wearable fitness band monitors metrics such as steps walked or run, heart rate, calories burned, and quality of sleep. The ARC EM4 Processor would be used to filter and process multiple sensor data and then provide the results to a Bluetooth wireless transceiver. It also manages power and system control functions across the device.

Memory selection priorities and types

The rise in IoT devices has triggered an evolution in technology for memory as well as other components. Accordingly, many memory options are now available. Making the right choice depends on your particular project’s priorities. According to the Java community blog, Jaxenter, considerations to be weighed up include:

• Cost. Cost is a concern in any project; the more expensive the memory selection, the more expensive the final device. Depending on the market, you need to weigh the cost vs. performance options.

• Size. Most IoT devices are small, so the embedded technology must also be small. The silicon area that the memory occupies must also be kept to a minimum, because the more wafer space required, the higher the cost.

• Power Consumption. Most IoT devices either run on small batteries or rely on energy harvesting for recharging. Therefore, it’s important to consider the power consumption of the memory selection, and choose an option that uses the least power and lowest voltage, both in use and during standby.

• Startup time. Users want excellent device performance, so memory must support a quick startup. Implementing a code-in-place option, which allows the device to execute code directly without needing to copy operating code from a separate EEPROM chip, reduces the time required to boot up. It also reduces the cost of the chip, since with substantial on-chip storage there is less need for RAM.

Keeping these points in mind will allow you to make the right memory selection for an IoT device – but what are the options?

Traditional External Flash Memory: Traditional external flash memory is inexpensive, reliable, and flexible, offering a high degree of density and the ability to execute in place without using too much power. Flash memory falls into two categories: NOR Flash and NAND Flash. NOR supports execution in place (XIP), while NAND does not. NAND tends to be best suited to data-heavy applications, such as wearable devices, which require cheap, high-capacity storage, while NOR Flash is generally used for devices like GPS units or e-readers that do not require as much memory.

Embedded Flash Memory: Embedded flash memory (also known as eFlash) as in Fig.4 is becoming more popular in IoT devices in which applications store critical data and code. Some experts predict that eFlash will become the most common type of IoT memory, given its high levels of performance and density, which allows it to support most microcontroller applications. This type of memory is also very flexible and can be programmed in the field.

Multichip Package Memory: Multichip package (MCP) memory combines the CPU, GPU, memory, and flash storage in one package. Currently, MCPs are common in smartphones and tablets, but they offer more power and more density for IoT devices. Engineers are currently developing MCPs that use less power but offer the same level of flexibility and computing ability.

Multi-Media Cards: Embedded Multi-Media Cards (eMMC) offer high storage capacity for IoT devices, at a reasonable cost, with excellent performance and low power consumption. The cards use specifically designed controllers, allowing for better integration into application systems. In fact, eMMC is among the top-rated options for performance, since it can process multiple tasks at once, effectively improving speed by up to 30 percent. The cards also offer a higher level of security, by expanding the common write-protect feature to prevent user data from being overwritten or erased without authorisation.

Embedded firmware requirements

IoT devices are controlled by code implemented in firmware. According to Particle, an IoT platform builder, success in firmware design calls for a number of considerations and skills:

• Building a stable firmware architecture that is scalable and well documented, using professional firmware toolchains and firmware languages like C and C++.

• Designing for constrained systems like low-power MCUs with limited memory, no memory management, and no direct interfaces like keyboards or screens.

• Designing for stability and error recovery including application watchdog timers, error correction, and auto-recovery from system faults.

• Paying special attention to inputs and outputs – this includes sensor data gathering, digital signal processing, local compression, and storage of data.

• Minimising power consumption by writing firmware that allows the device to enter sleep modes and consume the bare minimum energy required.

• Optimising bandwidth for cellular communication from your device to the cloud.

• Revising firmware continuously with OTA firmware updates to improve stability and functionality of your fleet (this adds value without needing to change hardware).
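The watchdog and auto-recovery item in the list above can be sketched in a few lines. Production firmware would normally use the MCU's hardware watchdog from C or C++; the `Watchdog` class below is a hypothetical software stand-in, with an injectable clock so the behaviour can be exercised on a desktop.

```python
import time
from typing import Callable


class Watchdog:
    """Software stand-in for a hardware watchdog timer (illustrative only)."""

    def __init__(self, timeout_s: float, on_expire: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout_s = timeout_s
        self._on_expire = on_expire      # recovery action, e.g. restart a task
        self._clock = clock
        self._deadline = clock() + timeout_s

    def kick(self) -> None:
        """Called by healthy application code to push the deadline back."""
        self._deadline = self._clock() + self._timeout_s

    def poll(self) -> bool:
        """Run the recovery action if the deadline has passed.

        Returns True when the watchdog fired, False otherwise.
        """
        if self._clock() >= self._deadline:
            self._on_expire()
            self.kick()  # re-arm so recovery gets a fresh timeout window
            return True
        return False
```

The main loop calls `kick()` only after it has completed a full, healthy iteration. If a sensor read or network call hangs, the kicks stop and the next `poll()` triggers the recovery action.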

Firmware management

Above, we mentioned the need to provision IoT edge devices with regular firmware updates. Imagine, for example, a huge marketplace where a deployment of beacons gives information about products on display. The location of these beacons might not be easily accessible, and their number makes it impractical to program them individually after deployment. Hence, when there is a new feature or a defect that mandates re-deployment, over-the-air (OTA) firmware upgrading becomes an important timesaving feature.

In fact, an Embedded Computing Design article has identified three primary reasons why device firmware should support OTA upgrading:

• Wide and heterogeneous device deployment: The number of devices, and different types of devices, play a very important role in a distributed network. A standardised OTA interface ensures reuse of the architecture across different nodes. Consider smart lighting in a stadium: while a huge floodlight and a hallway light may differ in their capabilities, a standard OTA interface over BLE can be reused across them.

• Changing requirements and new features: The IoT is a growing and rapidly changing market with new product requirements, and new features are added regularly. Security threats and privacy breaches are some of the biggest factors driving these changes. To protect against new virus attacks, device firmware can incorporate fixes and more secure algorithms through the OTA firmware upgrade process.

• Critical time-to-market needs: IoT systems have very short design cycles, and there is a need for constant innovation and deployment of the latest features. The general development process is to over-design the hardware to sustain expanding market requirements over a longer period of time. OTA firmware upgrade enables the deployment of solutions in phases. For instance, the initial design for a thermostat system can roll out quickly with just a thermal sensor, with later updates enabling the humidity sensor. Note that with this approach, the hardware design must be thought through, with future rollouts considered at the architecture phase from a hardware perspective.
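A common safeguard in OTA designs is to check a downloaded image before switching to it, and to keep the previous image available as a fallback. The sketch below assumes a two-slot (A/B) layout and a SHA-256 digest delivered alongside the image. The `Device` class and `apply_update` function are hypothetical names for illustration. A digest on its own only detects corruption, so a real deployment would also verify a cryptographic signature.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Device:
    """Toy model of a device with two firmware slots, A and B."""
    slots: Dict[str, bytes] = field(
        default_factory=lambda: {"A": b"fw-v1", "B": b""})
    active: str = "A"

    def inactive_slot(self) -> str:
        return "B" if self.active == "A" else "A"


def apply_update(device: Device, image: bytes, expected_sha256: str) -> bool:
    """Install `image` in the inactive slot only if its digest matches.

    Returns True if the device switched to the new image, False if the
    image was rejected and the device kept running the old one.
    """
    digest = hashlib.sha256(image).hexdigest()
    if not hmac.compare_digest(digest, expected_sha256):
        return False  # corrupt or mismatched download: leave slots untouched
    target = device.inactive_slot()
    device.slots[target] = image   # the old image stays in the other slot
    device.active = target
    return True
```

Because the running image is never overwritten, a failed or interrupted update leaves the device in its last known-good state.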

However, there can be pitfalls associated with OTA that product teams should be aware of. For example, the race to achieve the mass deployment of the product might prompt marketing to rely on the fact that firmware can be updated later. This in turn could lead to the release of unstable software or products that have not yet been fully validated or optimised.

In addition, frequent updates may not be well received by end users. Product teams should carefully weigh the impact of such decisions and apply restraint to not overuse OTA.

Fig.2: Arm processors address a wide range of IoT applications – Image via Arm

Software management

In the IoT ecosystem, being first to market is a huge competitive driver, which can mean that security, quality and dependability are sacrificed for speed of release.

According to Information Age, organisations need to adopt four important IoT software development practices to meet demands, avoid pitfalls and achieve success:

Review: Proper code review and repetitive testing need to be a priority. Manufacturers must communicate this message to software engineering teams and call for stricter software quality measures.

The high complexity of IoT applications leaves software susceptible to security lapses and software quality failure.

One bad transaction between an application, a sensor, and a hardware device can cause complete system failure. Organisations just can’t afford that.

Assessment: Continuous deployment in the connected world is business as usual. Updates occur non-stop and are often pushed multiple times a day.

The quality assurance burden on the software that interacts with IoT devices is greater than ever. If the software isn’t continuously monitored and the code evaluated, failure is almost guaranteed.

Responsibility: Management must take responsibility for quality assurance. Any manufacturer that doesn’t have a set of analytics to track its software risk – be it reliability, security or performance – is negligent in its responsibility to customers and other stakeholders.

Management needs to lead by example and communicate the direct link between software quality and security. It’s in their best interest, too, since security vulnerabilities caused by poor coding or system architectural decisions can be some of the most expensive to correct.

Advocacy: In addition to measurement and analytics, a cultural shift to include education needs to occur. Developers and management collectively need to spread the word in the community about standards.

Significant strides have been made in creating initiatives for manufacturers and IT departments to consistently measure the quality of their software.

In 2015, the Object Management Group (OMG) approved a set of global standards proposed by the Consortium for IT Software Quality (CISQ) to help companies quantify and meet specific goals for software quality.

Power management

Minimising a SoC’s power demand as much as possible is nearly always a priority for IoT edge device designers, especially if the device is battery-operated, or relies on energy harvesting; excessive power demand will drain the battery too quickly, and may also cause overheating problems.
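To see how quickly power demand translates into battery life, it helps to work through the arithmetic for a device that alternates between an active state and a sleep state. The functions below are a first-order sketch. The figures in the usage example are placeholder values chosen for illustration, not measurements of any real part. The model ignores self-discharge, temperature effects and regulator losses.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       duty_cycle: float) -> float:
    """Time-weighted average current for an active/sleep duty cycle."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealised runtime in hours: capacity divided by average current."""
    if avg_current_ma <= 0.0:
        raise ValueError("avg_current_ma must be positive")
    return capacity_mah / avg_current_ma


if __name__ == "__main__":
    # Placeholder figures: 10 mA active, 5 uA asleep, awake 1% of the time,
    # powered from a 220 mAh cell.
    avg = average_current_ma(active_ma=10.0, sleep_ma=0.005, duty_cycle=0.01)
    print(f"average current: {avg:.5f} mA")
    print(f"estimated life: {battery_life_hours(220.0, avg) / 24:.0f} days")
```

With figures like these, the active-state term makes up most of the average current, which is why shortening the time spent awake is usually the first target.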

A white paper published by Sparsh Mittal, titled ‘A Survey of Techniques for Improving Energy Efficiency in Embedded Computing Systems’, describes a set of techniques for reducing power consumption:

• Voltage reduction – including dual-voltage CPUs, dynamic voltage scaling and under-voltage

• Frequency reduction – including underclocking and dynamic frequency scaling

• Capacitance reduction – including increasingly integrated circuits that replace PCB traces between two chips with relatively lower-capacitance on-chip metal interconnect between two sections of a single integrated chip; low-k dielectric, etc.

• Techniques such as clock gating and globally asynchronous locally synchronous (GALS), which can be thought of as reducing the capacitance switched on each clock tick, or as locally reducing the clock frequency in some sections of the chip

• Various techniques to reduce switching activity, that is, the number of transitions the CPU drives onto off-chip data buses, such as a non-multiplexed address bus, bus encoding such as Gray code addressing, or power protocol

• Sacrificing transistor density for higher frequencies.

• Layering heat-conduction zones within the CPU framework

• Recycling at least some of the energy stored in the capacitors (rather than dissipating it as heat in transistors) – including adiabatic circuit and energy recovery logic

• Optimising machine code – by implementing compiler optimisations that schedule clusters of instructions using common components, the CPU power used to run an application can be significantly reduced
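Several of the techniques above follow from the standard first-order model of CMOS switching power, P = a·C·V²·f, where a is the switching activity, C the switched capacitance, V the supply voltage and f the clock frequency. The sketch below evaluates that model and also counts bit transitions on a bus, to show why Gray code addressing reduces switching activity. The function names are illustrative, and the model leaves out leakage and short-circuit power.

```python
from typing import Sequence


def dynamic_power_w(activity: float, capacitance_f: float,
                    voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power: P = a * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


def to_gray(n: int) -> int:
    """Convert a binary count to its reflected Gray code."""
    return n ^ (n >> 1)


def bus_transitions(words: Sequence[int]) -> int:
    """Count bit flips between consecutive words driven onto a bus."""
    words = list(words)
    return sum(bin(a ^ b).count("1") for a, b in zip(words, words[1:]))


if __name__ == "__main__":
    addresses = list(range(16))  # a sequential 4-bit address sweep
    print("binary transitions:", bus_transitions(addresses))
    print("gray transitions:  ",
          bus_transitions([to_gray(a) for a in addresses]))
```

Because voltage enters the model squared, halving the supply voltage cuts switching power to a quarter at the same frequency. Consecutive Gray-coded addresses differ in exactly one bit, so a sequential sweep flips one bus line per step.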

Antenna design

Antenna design is another area that is being made more challenging by the demands of IoT applications. Both space and available power are shrinking, but unless these factors are countered with ever-improving antenna design, the reliability of the device’s wireless communication will become compromised. This problem is often exacerbated by extreme interference and cosite conditions.

Fig.3: Always handle circuit boards by the edges – Image via Circuit Technology Centre

An article in ‘Microwaves&RF’ describes how weighing cost, size, design effort, and manufacturing complexity for IoT modules makes an engineer’s design decisions much more challenging. Common IoT antenna topologies range from printed circuit board (PCB) designs to prefabricated chip antennas.
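A quick calculation shows why shrinking board space puts pressure on antenna design. A simple quarter-wave element has a length of one quarter of the wavelength, and the wavelength is the propagation speed divided by the frequency. The sketch below assumes free-space propagation by default. The `velocity_factor` parameter is a hypothetical knob for approximating slower propagation along a PCB trace, and real designs need simulation and tuning beyond this estimate.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def quarter_wave_mm(freq_hz: float, velocity_factor: float = 1.0) -> float:
    """Approximate length of a quarter-wave element in millimetres."""
    if freq_hz <= 0.0 or velocity_factor <= 0.0:
        raise ValueError("freq_hz and velocity_factor must be positive")
    wavelength_m = SPEED_OF_LIGHT_M_S * velocity_factor / freq_hz
    return wavelength_m / 4.0 * 1000.0


if __name__ == "__main__":
    # 2.4 GHz is the band used by BLE and Zigbee; 868 MHz is a common
    # sub-GHz band in Europe.
    for f in (2.4e9, 868e6):
        print(f"{f / 1e6:.0f} MHz: {quarter_wave_mm(f):.1f} mm")
```

At 2.4 GHz the free-space quarter wavelength is roughly 31 mm, which is already a large share of the space available in a wearable. This helps explain the appeal of meandered PCB traces and chip antennas.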

Security in IoT edge devices

IoT edge devices, like any other online equipment, need protection against hacking and cyber-attack. Issues include protection of critical assets, safe crypto implementations, secure remote firmware updates, firmware IP protection and secure debugging. Such protection is available at hardware CPU level.

For example, the Cortex-M33, Cortex-M23 and all of the Cortex-A processors include Arm TrustZone technology to provide a secure foundation in the SoC hardware. TrustZone is a widely deployed security technology, providing banking-class trust capability in devices such as premium smartphones. While the Cortex-A32 includes cryptographic instructions for efficient authentication and protection, it can also be coupled with TrustZone CryptoCell-700 series products to enable enhanced cryptographic hardware acceleration and advanced root of trust.
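Hardware features such as these support, but do not replace, careful use of cryptography in software. As one small illustration of a safe crypto implementation, the sketch below authenticates a message with HMAC-SHA256 and checks the tag with a constant-time comparison, which avoids leaking information through timing. The `sign` and `verify` names and the key handling are assumptions for illustration. On a real device the key would sit in protected storage rather than in application code.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag for `message` under `key`."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a tag using a constant-time comparison."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    key = b"demo-key-not-for-production"   # placeholder key
    reading = b'{"sensor": "temp", "value": 21.5}'
    tag = sign(key, reading)
    print("genuine message accepted:", verify(key, reading, tag))
    print("tampered message accepted:", verify(key, reading + b" ", tag))
```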

Electrostatic discharge (ESD)

Certain components used in electronic assemblies are sensitive to static electricity and can be damaged by its discharge (electrostatic discharge or ESD). If a person carrying a static charge touches a circuit board assembly at the right solder joint or conductive pattern, the assembly can be damaged as the discharge passes through the conductive pattern to a static-sensitive component. Note that the static level that damages components (less than 3,000V) usually cannot be felt by humans.

Problems can also be caused by electrical overstress (EOS) arising from generation of unwanted energy. EOS can be caused by spikes from soldering irons, solder extractors, testing instruments and other electrically operated equipment. This equipment must be designed to prevent unwanted electrical discharges.

ESD/EOS safe work areas should protect sensitive components from damage by spikes and static discharges. An article by Circuit Technology Centre, Inc. summarises, for reference only, handling and storage methods to be followed within these safe areas, as below:

• Circuit board assemblies must always be handled at properly designated work areas.

• Designated work areas must be checked periodically to ensure their continued protection. Areas of main concern include:

- Proper grounding methods.

- Static dissipation of work surfaces.

- Static dissipation of floor surfaces.

- Operation of ion blowers and ion air guns.

• Designated work areas must be kept free of static-generating materials such as Styrofoam, vinyl, plastic and fabrics.

• Work areas must be kept clean and neat. To prevent contamination of circuit board assemblies, there must be no eating or smoking in the work area.

• When not being worked on, sensitive components and circuit boards must be enclosed in shielded bags or boxes. There are three types of ESD protective enclosure materials:

- Static Shielding – Prevents static electricity from passing through the package.

- Antistatic – Provides antistatic cushioning for electronic assemblies.

- Static Dissipative – An "over-package" that has enough conductivity to dissipate any static build-up.

• Whenever handling a circuit board assembly, the operator must be properly grounded by one of the following:

- Wearing a wrist strap connected to earth ground.

- Wearing two heel grounders and having both feet on a static dissipative floor surface.

• Circuit board assemblies should be handled by the edges. Avoid touching the circuits or components. (See Figure 3)

• Components should be handled by the edges when possible. Avoid touching the component leads.

• Hand creams and lotions containing silicone must not be used since they can cause solderability and epoxy adhesion problems. Lotions specifically formulated to prevent contamination of circuit boards are available.

• Stacking of circuit boards and assemblies should be avoided to prevent physical damage. Special racks and trays are available for handling.

Conclusion

The IoT is still a new phenomenon, and as such is still creating plenty of opportunities for edge product and system designers in home automation, industrial, medical and many other applications. While this novelty is attractive, it can tempt some organisations into areas where they have limited experience. This creates risk at many levels, from project planning and management, through detailed hardware, software and firmware design, to deployment and provisioning.

In this article we have looked at these product life cycle issues, while highlighting the areas of risk and how to mitigate them.
